A First Look at Web Crawlers in C#

Preface

I recently wanted to learn C# WinForms, so I decided to build a small tool from scratch as practice.

Process

To send HTTP requests in C#, I used the `WebRequest` class from the `System.Net` namespace (`using System.Net;`).

Example

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;

namespace ConsoleApplication47
{
    class Program
    {
        static void Main(string[] args)
        {
            string url = "http://www.baidu.com/";

            // Create the request for the target URL
            HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
            // Send a browser User-Agent header
            request.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36";
            // Set the request method
            request.Method = "GET";

            // Get the response; the using blocks close the response and its stream afterwards
            using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
            // Read the response stream, decoding its bytes as UTF-8
            using (StreamReader sr = new StreamReader(response.GetResponseStream(), Encoding.UTF8))
            {
                // Read the entire decoded body
                string html = sr.ReadToEnd();
                Console.WriteLine(html);
            }

            Console.ReadLine();
        }
    }
}
```
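`WebRequest`/`HttpWebRequest` still work on .NET Framework, but starting with .NET 6 they are marked obsolete (warning SYSLIB0014) and `HttpClient` is the recommended replacement. Below is a minimal sketch of the same GET request with `HttpClient`. The names `HtmlFetcher`, `FetchHtmlAsync`, and `StubHandler` are hypothetical; `StubHandler` is only a test double that returns canned HTML so the sketch can run without network access.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

public static class HtmlFetcher
{
    // Hypothetical helper: GET `url` with a browser User-Agent and return the decoded body
    public static async Task<string> FetchHtmlAsync(HttpClient client, string url)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Get, url))
        {
            // Same UA string as the WebRequest example, added without header validation
            request.Headers.TryAddWithoutValidation("User-Agent",
                "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36");
            using (HttpResponseMessage response = await client.SendAsync(request))
            {
                // Throw on 4xx/5xx instead of returning an error page as if it were a result
                response.EnsureSuccessStatusCode();
                // Decodes using the charset from the Content-Type header when one is present
                return await response.Content.ReadAsStringAsync();
            }
        }
    }
}

// Hypothetical test double: answers every request with a fixed HTML page, no network involved
public sealed class StubHandler : HttpMessageHandler
{
    protected override Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var response = new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = new StringContent("<html>hello</html>", Encoding.UTF8, "text/html")
        };
        return Task.FromResult(response);
    }
}
```

In real use you would pass a plain `new HttpClient()` and reuse that one instance for all requests rather than creating a client per request.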

References:

https://blog.csdn.net/ksr12333/article/details/48845417

https://crifan.github.io/crawl_your_data_spider_technology/website/how_write_spider/use_csharp/

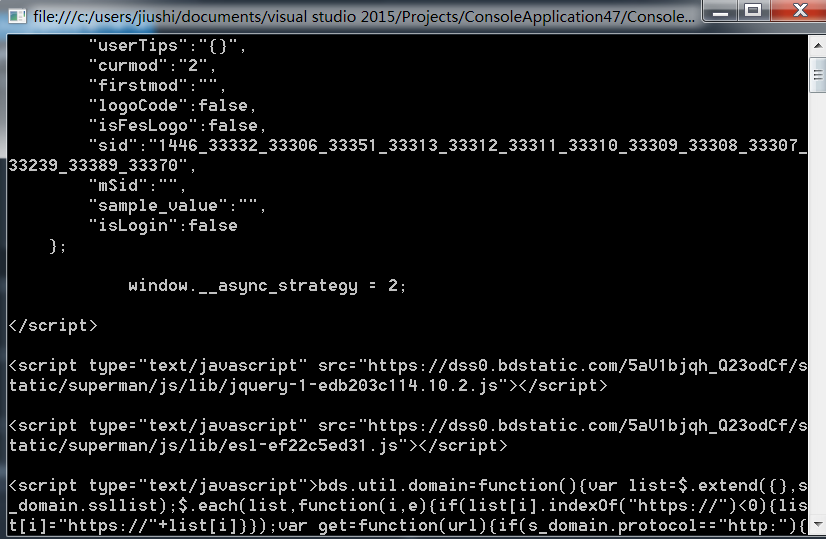

The output looks like this:

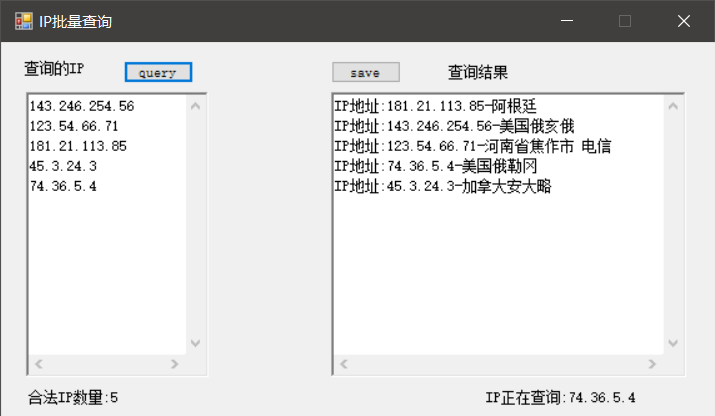

The goal of this project is to look up where an IP address is located by searching for it on Baidu.
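Each lookup is just a Baidu search whose `wd` parameter is the IP. A minimal sketch of validating one input line and building that search URL, assuming one IP per line as in the form code further down. `IpQuery.TryBuildQueryUrl` is a hypothetical name; the octet pattern is the one the form code uses, anchored here so the whole line must match, and `Uri.EscapeDataString` is only a safeguard, since a valid IPv4 address contains nothing that needs escaping.

```csharp
using System;
using System.Text.RegularExpressions;

public static class IpQuery
{
    // Octet pattern from the form code, anchored so the entire trimmed line must be an IPv4 address
    private static readonly Regex Ipv4 = new Regex(
        @"^(25[0-5]|2[0-4]\d|[0-1]\d{2}|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|[0-1]\d{2}|[1-9]?\d)){3}$");

    // Hypothetical helper: returns false (and an empty url) for lines that are not IPv4 addresses
    public static bool TryBuildQueryUrl(string line, out string url)
    {
        string ip = line.Trim();
        if (!Ipv4.IsMatch(ip))
        {
            url = string.Empty;
            return false;
        }
        // Only wd carries the search term; the other parameters are copied verbatim from the original URL
        url = "http://www.baidu.com/s?ie=utf-8&f=8&rsv_bp=1&rsv_idx=1&tn=baidu&wd=" + Uri.EscapeDataString(ip);
        return true;
    }
}
```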

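Behind the scenes, Baidu answers these IP queries by calling ip138.com's lookup service. If you crawl ip138 directly at a high rate, your IP gets banned within a short time.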

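Querying through Baidu does not have this problem. When requesting Baidu from multiple threads, a fast request rate does trigger Baidu's security check, but in my testing, simply sending a cookie with each request was enough to get around it.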
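The form code below pastes the browser's whole `Cookie` header into `req.Headers`. An alternative is to load those pairs into a `CookieContainer`, which both `HttpWebRequest.CookieContainer` and `HttpClientHandler.CookieContainer` accept, so cookies the server sets in later responses are kept as well. A minimal sketch, assuming the raw header uses the usual `name=value; name2=value2` form; `CookieUtil.FromHeader` is a hypothetical helper, and the sample values in the check are taken from the cookie string in the form code.

```csharp
using System;
using System.Net;

public static class CookieUtil
{
    // Hypothetical helper: splits a raw "name=value; name2=value2" header (as copied from
    // browser dev tools) and adds each pair to a CookieContainer scoped to `site`
    public static CookieContainer FromHeader(Uri site, string rawHeader)
    {
        var container = new CookieContainer();
        foreach (string part in rawHeader.Split(';'))
        {
            string pair = part.Trim();
            int eq = pair.IndexOf('=');
            if (eq <= 0) continue; // skip empty or malformed fragments
            string name = pair.Substring(0, eq);
            string value = pair.Substring(eq + 1); // the value itself may contain '=' or ':'
            container.Add(site, new Cookie(name, value));
        }
        return container;
    }
}
```

With `HttpWebRequest`, the result would be assigned to `req.CookieContainer` in place of the `req.Headers.Add("cookie", ...)` call.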

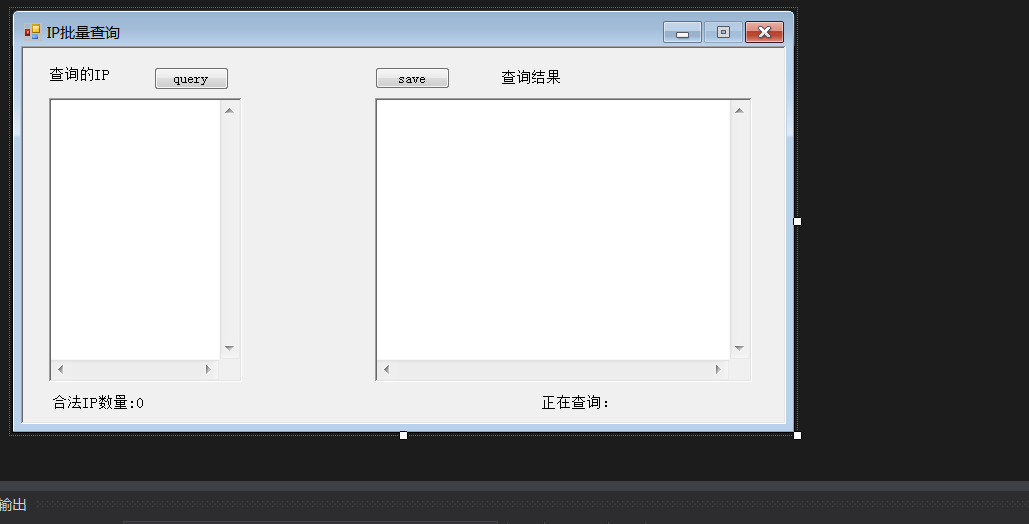

The UI looks like this:

Points worth noting here:

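- How to update the UI from worker threads, i.e. threads other than the one that created the controls
- How to make new output show up in the UI promptly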
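The usual WinForms answer to the first point is to check `InvokeRequired` and marshal the update through `Invoke`, rather than setting `Control.CheckForIllegalCrossThreadCalls = false` (which the form code leaves commented out). A minimal sketch of such a helper, written against `System.ComponentModel.ISynchronizeInvoke`, the interface `Control` implements, so any form or control can be passed in. `UiThread`, `Run`, and `FakeTarget` are hypothetical names; `FakeTarget` exists only so the helper can be exercised without a real form.

```csharp
using System;
using System.ComponentModel;

public static class UiThread
{
    // Hypothetical helper: runs `action` on the thread that owns `target`.
    // Already on that thread -> run directly; otherwise Invoke marshals it and blocks until it finishes.
    public static void Run(ISynchronizeInvoke target, Action action)
    {
        if (target.InvokeRequired)
            target.Invoke(action, null);
        else
            action();
    }
}

// Hypothetical stand-in for a Control, used only to exercise the helper without WinForms
public sealed class FakeTarget : ISynchronizeInvoke
{
    public bool InvokeRequired { get; set; }
    public int InvokeCalls { get; private set; }

    public object Invoke(Delegate method, object[] args)
    {
        InvokeCalls++;
        return method.DynamicInvoke(args);
    }

    public IAsyncResult BeginInvoke(Delegate method, object[] args) => throw new NotSupportedException();
    public object EndInvoke(IAsyncResult result) => throw new NotSupportedException();
}
```

In the form this would read `UiThread.Run(result, () => result.AppendText(text));`. `Invoke` blocks the worker until the UI thread has run the delegate, while `BeginInvoke` queues it and returns immediately. As for the second point, updates appear on their own as long as the UI thread is not blocked; `Refresh()` only matters when the UI thread itself is busy doing the work.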

The full code is as follows:

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Net;
using System.Text;
using System.Text.RegularExpressions;
using System.Threading.Tasks;
using System.Windows.Forms;

namespace WindowsFormsApplication5
{
    public partial class Form1 : Form
    {
        // A line counts as valid only if the whole (trimmed) line is a dotted-quad IPv4 address
        private static readonly Regex IpPattern = new Regex(
            @"^(25[0-5]|2[0-4]\d|[0-1]\d{2}|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|[0-1]\d{2}|[1-9]?\d)){3}$");

        // Matches the "IP地址:..." line on Baidu's result page
        private static readonly Regex ResultPattern = new Regex(@"IP地址:.*");

        public Form1()
        {
            // Instead of setting Control.CheckForIllegalCrossThreadCalls = false,
            // worker threads marshal UI updates through Invoke (see AppendResult)
            InitializeComponent();
        }

        // Appends one line to the result box from any thread and scrolls to the end
        private void AppendResult(string line)
        {
            this.Invoke((MethodInvoker)delegate
            {
                result.AppendText(line + "\n");
                result.SelectionStart = result.TextLength;
                result.ScrollToCaret();
            });
        }

        // Looks up one IP through Baidu search; runs on a background thread
        public void query(string ip)
        {
            this.Invoke((MethodInvoker)delegate { label4.Text = "Querying IP: " + ip; });

            string url = "http://www.baidu.com/s?ie=utf-8&f=8&rsv_bp=1&rsv_idx=1&tn=baidu&wd=" + ip;
            HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
            // Session cookie copied from a browser; it gets past Baidu's security check when requests come in fast
            req.Headers.Add("cookie", "PSTM=1598200102; BAIDUID=73A08DA2B8A1C47748FE6E0263B2D7FC:FG=1; BIDUPSID=D1A9BC856D198D087B62FE6369A51D38; BD_UPN=12314753; sug=3; ORIGIN=2; bdime=0; sugstore=1; H_PS_PSSID=1453_33331_33306_31660_32973_33285_33287_33350_33313_33312_33311_33310_33309_33318_33308_33307_33239_33266_33389_33384; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; __yjs_duid=1_2bbdf988e437a50cf1e9527aeb11331f1608818232011; delPer=0; BD_CK_SAM=1; PSINO=6; COOKIE_SESSION=22_1_9_8_31_4_0_0_8_4_1_0_27_0_7_0_1608820717_1608280511_1608820710%7C9%23347061_47_1608280509%7C9; ZD_ENTRY=baidu; BD_HOME=1; BAIDUID_BFESS=F0D55FAB35899A68F91651F30B80AA72:FG=1; H_PS_645EC=7084MH7oreen2FkWL7qh5SKWiBZPRoo%2BRDJ4tyV3Dtv8JX%2BpHwi%2FAw83RfE; BA_HECTOR=8h000g848ka4akak7v1fu9k6f0q");
            req.Method = "GET";

            try
            {
                string html;
                using (HttpWebResponse response = (HttpWebResponse)req.GetResponse())
                using (StreamReader sr = new StreamReader(response.GetResponseStream(), Encoding.UTF8))
                {
                    html = sr.ReadToEnd();
                }

                Match match = ResultPattern.Match(html);
                if (match.Success)
                {
                    // Drop spaces and turn each closing </span> into a "-" separator
                    AppendResult(match.Value.Replace(" ", "").Replace("</span>", "-"));
                }
                else
                {
                    AppendResult("IP:" + ip + " lookup failed");
                }
            }
            catch (Exception)
            {
                AppendResult("IP:" + ip + " request failed");
            }
        }

        private async void button2_Click(object sender, EventArgs e)
        {
            // Keep only the lines that are valid IPv4 addresses
            List<string> iplist = richTextBox1.Text
                .Split('\n')
                .Select(line => line.Trim())
                .Where(line => IpPattern.IsMatch(line))
                .ToList();

            label3.Text = "Valid IPs: " + iplist.Count;

            // Run every query in the background, then wait without blocking the UI thread,
            // so "Done" appears only after all queries have actually finished
            await Task.WhenAll(iplist.Select(ip => Task.Run(() => query(ip))));

            label4.Text = "Done";
        }

        private void button1_Click(object sender, EventArgs e)
        {
            try
            {
                File.WriteAllText("save.txt", result.Text);
                MessageBox.Show("File saved");
            }
            catch (Exception)
            {
                MessageBox.Show("Failed to save file");
            }
        }
    }
}
```
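The form code starts a separate worker for every IP with no upper bound, and the text above notes that fast multithreaded requests are what trigger Baidu's security check. A minimal sketch of capping the number of in-flight queries with `SemaphoreSlim`, assuming the per-IP work is exposed as a `Func<T, Task>`; `Throttle.RunAsync` and any particular limit value are hypothetical choices, not part of the original tool. The returned task completes only after every item has finished, so a "done" label set after awaiting it is accurate.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class Throttle
{
    // Hypothetical helper: runs `work` for every item, never more than `maxParallel` at once,
    // and completes only when all items are done
    public static async Task RunAsync<T>(IEnumerable<T> items, int maxParallel, Func<T, Task> work)
    {
        if (maxParallel < 1) throw new ArgumentOutOfRangeException(nameof(maxParallel));

        using (var gate = new SemaphoreSlim(maxParallel))
        {
            List<Task> tasks = items.Select(async item =>
            {
                await gate.WaitAsync();   // wait for a free slot
                try { await work(item); }
                finally { gate.Release(); } // free the slot even if `work` throws
            }).ToList();

            await Task.WhenAll(tasks);
        }
    }
}
```

In the form, `button2_Click` could then use `await Throttle.RunAsync(iplist, 4, ip => Task.Run(() => query(ip)));` in place of starting every query at once.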

Final test results:

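When reposting, please credit the source. You are welcome to check the sources cited in this article and to point out any errors or unclear wording.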

Title: A First Look at Web Crawlers in C#

Author: 九世

Published: 2020-12-26, 12:11:54

Last updated: 2020-12-26, 12:56:10

Original link: http://jiushill.github.io/posts/ec93c081.html

License: "Attribution-NonCommercial-ShareAlike 4.0". Please keep the original link and author when reposting.